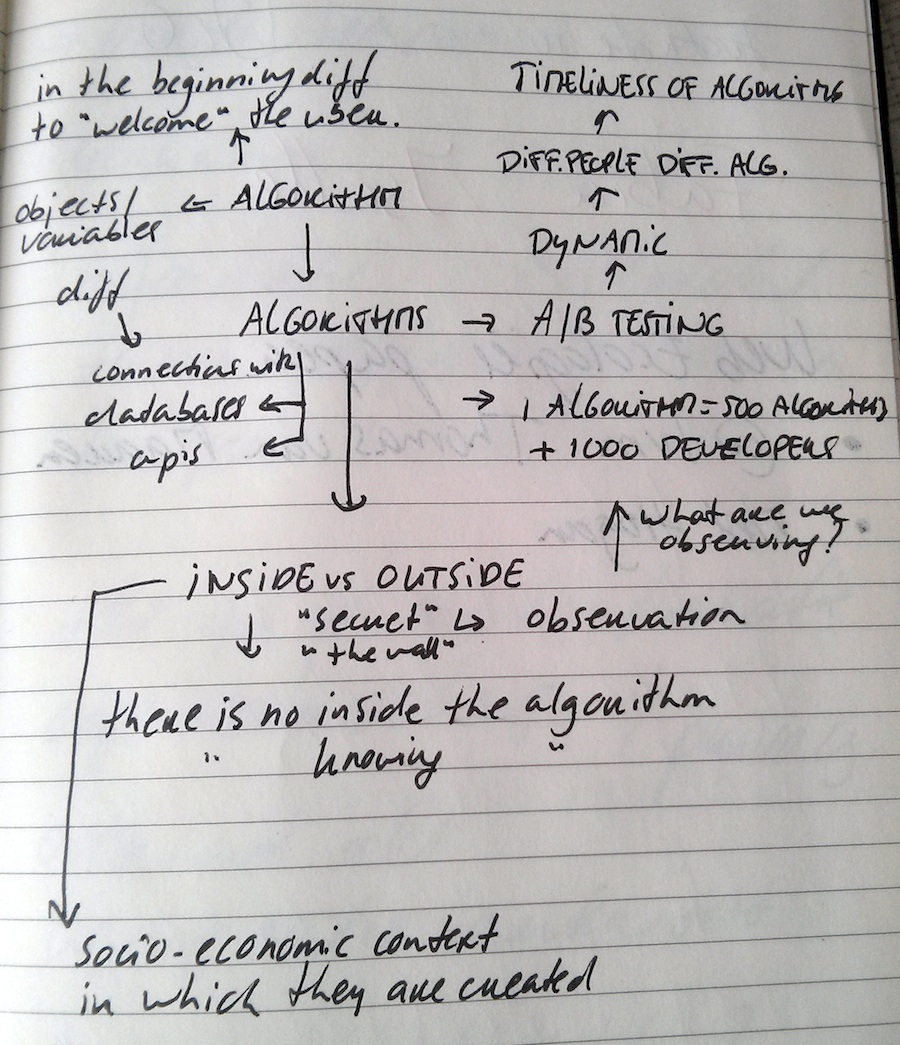

On Saturday, May 4th I attended the ‘The Work of Algorithms’ panel where Nick Seaver talked about Knowing Algorithms. In his talk Seaver discusses the issue of dealing with proprietary algorithms within research and how a focus on this proprietary or ‘black box’ aspect has skewed our criticisms of algorithms. Strands of research dealing with proprietary algorithms, such as Google’s PageRank or Facebook’s EdgeRank, focus on user experimentation and engaging systematically with the system in order to derive findings. Seaver argues how this is problematic since algorithms do not only adapt over time, where in the beginning algorithms behave differently then when they have adjusted to the user, ((Algorithms are different in the beginning to “welcome” the user and to come to “know” the user to be able to a serve certain results or recommend objects.)) but also how algorithms are unstable in themselves as may be seen in the case of A/B testing:

Over the past decade, the power of A/B testing has become an open secret of high-stakes web development. It’s now the standard (but seldom advertised) means through which Silicon Valley improves its online products. Using A/B, new ideas can be essentially focus-group tested in real time: Without being told, a fraction of users are diverted to a slightly different version of a given web page and their behavior compared against the mass of users on the standard site. If the new version proves superior—gaining more clicks, longer visits, more purchases—it will displace the original; if the new version is inferior, it’s quietly phased out without most users ever seeing it. A/B allows seemingly subjective questions of design—color, layout, image selection, text—to become incontrovertible matters of data-driven social science. (Christian 2012) ((“The A/B Test: Inside the Technology That’s Changing the Rules of Business | Wired Business | Wired.com.†2013. Wired Business. Accessed May 13. http://www.wired.com/business/2012/04/ff_abtesting/.))

At the moment there might be 10 million different versions of Bing because of A/B testing with algorithms and their results in realtime, so even if we engage systematically with Bing for research purposes, which Bing are we talking about? Algorithms also change over time as Facebook and their EdgeRank algorithm are constantly in motion: You cannot step into the same Facebook twice.

Some researchers argue that the solution to knowing the algorithm is “behind the wall” and that we can only explain “the outside” or the workings of the algorithm if you have access to the “inside” or the formula. But, Seaver argues, there is no “inside” the algorithm and there is no “knowing” the algorithm without understanding the context in which algorithms are made. We have to understand the context algorithms are created in, which may serve as moments of stabilization as social details become translated into technical details. Therefor, Seaver wants to do an ethnography of engineers to get to know the algorithms.

During the panel discussion Nancy Baym states that we are talking about “the” algorithm, indicating a singular and stable object, while it has become clear that we may be talking about 500 different algorithms, created by 1000 different engineers. Should we talk of algorithmic systems instead of algorithms? This may be a good way into discussing the various objects and variables within algorithms that may each be connected to different databases (through APIs) and therefor become part of various algorithms themselves.